This is a series of notes summarizing online lectures by Hung-Yi Lee, a professor at NTU (National Taiwan University).

Link is here. The lectures were given in Mandarin Chinese.

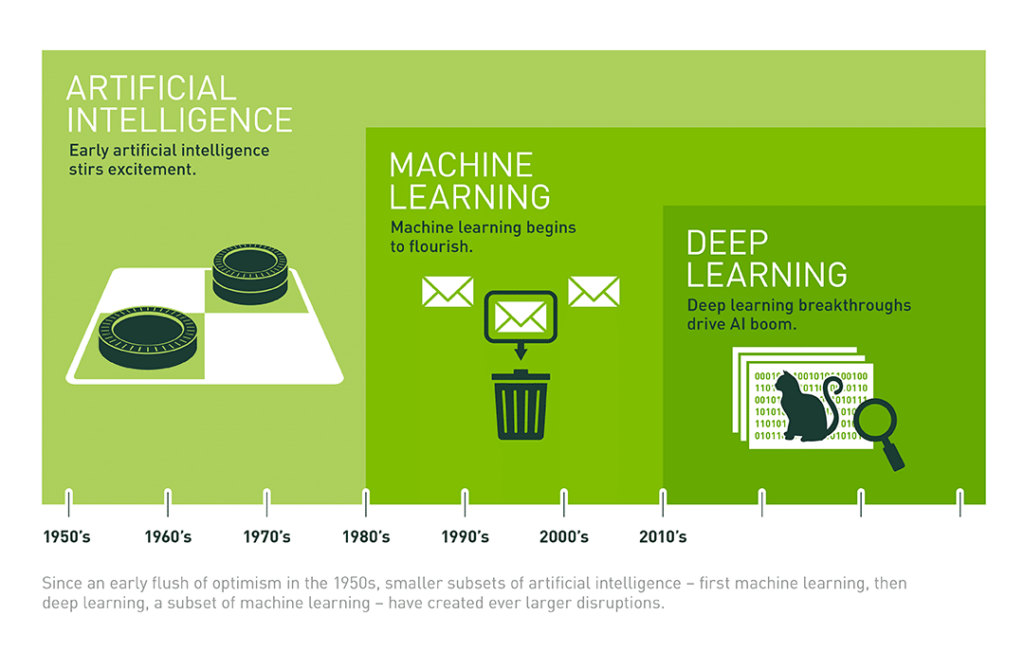

I really like this diagram because it says a lot in one picture: it shows the hierarchical relationship between AI, machine learning, and deep learning. AI is the broadest category, machine learning is a subset of AI, and deep learning is in turn a subset of machine learning.

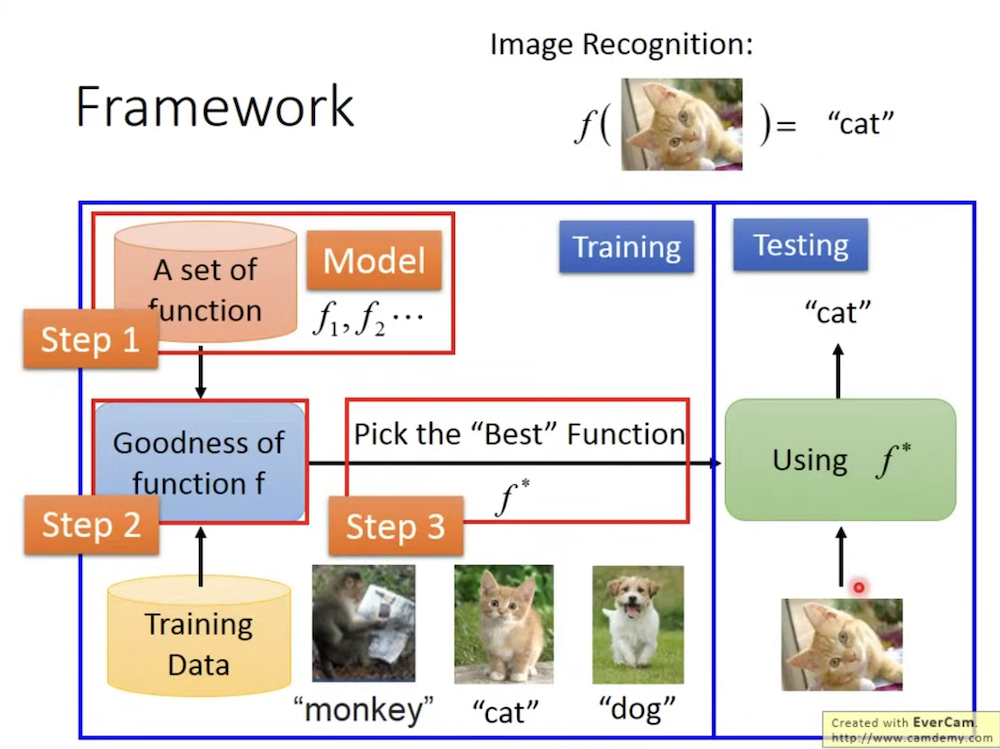

In simple terms, machine learning means searching a set of candidate functions for the one (the "recipe" or formula) that best fits the given data.

The process can be broken down into 3 steps.

Step 1. Define a set of functions

Step 2. Evaluate the goodness of each function

Step 3. Pick the best function
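The three steps can be sketched in a few lines of Python. This is a toy illustration under my own assumptions, not code from the lecture: the "set of functions" is every line `y = w * x + b` on a coarse grid, "goodness" is mean squared error (lower is better), and the four data points are made up.

```python
# Toy version of the three steps; the dataset and the grid of lines are assumptions.

# Hypothetical training data, roughly following y = 2x + 1
data = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.2), (3.0, 6.8)]

# Step 1: define a set of functions (every line y = w*x + b with w, b on a 0.1 grid)
candidates = [(w / 10, b / 10) for w in range(0, 41) for b in range(-20, 21)]

def predict(w, b, x):
    return w * x + b

# Step 2: goodness of a function, measured here as mean squared error (lower is better)
def loss(w, b):
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

# Step 3: pick the best function, i.e. the candidate with the smallest loss
best_w, best_b = min(candidates, key=lambda wb: loss(*wb))
print(f"best function: y = {best_w:.1f} * x + {best_b:.1f}")
```

Real models search a far larger set of functions and use gradient descent instead of trying every candidate, but the structure (model, loss, search) is the same.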

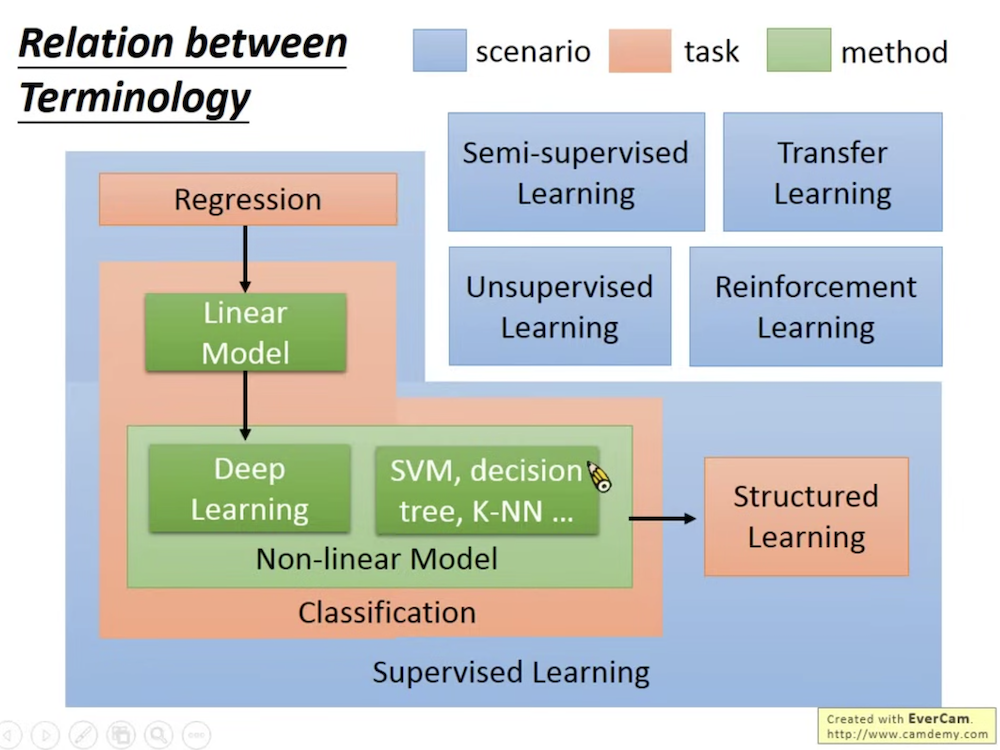

Supervised learning: The training data consists of input/output pairs of the target function, which means every example must come with its answer (label).
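To make "every example comes with its label" concrete, here is a minimal sketch using a 1-nearest-neighbour classifier. The choice of classifier, the 2-D points, and the "cat"/"dog" labels are all illustrative assumptions.

```python
import math

# Hypothetical labeled data: each input comes with its answer (label)
train = [
    ((1.0, 1.0), "cat"),
    ((1.2, 0.8), "cat"),
    ((4.0, 4.2), "dog"),
    ((3.8, 4.0), "dog"),
]

def classify(point):
    # Predict the label of the closest labeled training example
    _, label = min(train, key=lambda ex: math.dist(ex[0], point))
    return label

print(classify((1.1, 0.9)))  # → cat
print(classify((4.1, 3.9)))  # → dog
```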

Semi-supervised learning: Unlike supervised learning, semi-supervised learning works with data that is only partly labeled; some of it is unlabeled. The model uses the labeled data to make predictions on the unlabeled data, then uses those predictions to refine its understanding.
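One common way to do this is self-training (pseudo-labelling). The sketch below is only one of several semi-supervised techniques, and the 1-D data and the nearest-centroid model are my own assumptions: train on the few labeled points, label the rest with that model, then retrain on everything.

```python
labeled = [(1.0, "A"), (9.0, "B")]          # only a few points have labels
unlabeled = [1.5, 2.0, 2.5, 7.5, 8.0, 8.5]  # most points have no label

def centroids(examples):
    # Average feature value per class
    groups = {}
    for x, y in examples:
        groups.setdefault(y, []).append(x)
    return {y: sum(xs) / len(xs) for y, xs in groups.items()}

def predict(x, cents):
    # Assign the class whose centroid is closest
    return min(cents, key=lambda y: abs(cents[y] - x))

# 1. Train on the labeled data only
cents = centroids(labeled)
# 2. Use that model to pseudo-label the unlabeled data
pseudo = [(x, predict(x, cents)) for x in unlabeled]
# 3. Retrain on labeled + pseudo-labeled data to refine the model
cents = centroids(labeled + pseudo)
print(cents)  # → {'A': 1.75, 'B': 8.25}
```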

Transfer learning: Unlike supervised and semi-supervised learning, which train a model from scratch, transfer learning takes a pre-trained model and reuses it as the starting point for a different task. The idea is to transfer knowledge gained from solving one problem to a different but related problem.
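A minimal sketch of the idea, assuming a 1-D linear model trained with plain gradient descent. The source task, the two-example target task, the learning rate, and the step counts are all made up for illustration; real transfer learning usually reuses a large pre-trained network rather than two numbers.

```python
def train(data, w=0.0, b=0.0, lr=0.05, steps=100):
    # Gradient descent on mean squared error, starting from the given (w, b)
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Hypothetical source task with plenty of data: y = 3x + 1
source = [(i / 10, 3 * (i / 10) + 1) for i in range(30)]
# Hypothetical related target task with only two examples: y = 3x + 1.2
target = [(0.5, 2.7), (2.0, 7.2)]

# Pre-train on the source task
w_pre, b_pre = train(source, steps=1000)

# Fine-tune on the target with a small budget, starting from the pre-trained weights...
w_tl, b_tl = train(target, w=w_pre, b=b_pre, steps=20)
# ...versus training from scratch on the same small data and budget
w_sc, b_sc = train(target, steps=20)

print("transfer:", mse(w_tl, b_tl, target))
print("scratch: ", mse(w_sc, b_sc, target))
```

Because the pre-trained weights already sit close to a good solution for the related task, the fine-tuned model reaches a lower target loss than the one trained from scratch with the same data and number of steps.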

Unsupervised learning: Training a model without any labeled outputs. The goal is to discover the inherent structure or patterns in the data.
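Clustering is a typical example. The sketch below runs k-means (one common unsupervised method) on made-up 1-D points; `k = 2`, the naive initialisation, and the fixed 10 iterations are assumptions chosen for simplicity.

```python
points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]  # no labels at all
centres = [points[0], points[3]]          # naive initialisation: pick two points

for _ in range(10):
    # Assignment step: each point joins its nearest centre
    clusters = [[] for _ in centres]
    for p in points:
        nearest = min(range(len(centres)), key=lambda i: abs(p - centres[i]))
        clusters[nearest].append(p)
    # Update step: move each centre to the mean of its cluster (keep it if empty)
    centres = [sum(c) / len(c) if c else centres[i] for i, c in enumerate(clusters)]

print(centres)
```

The algorithm finds the two groups (around 1 and around 5) purely from the structure of the data.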

Reinforcement learning: Whereas supervised learning learns from labeled outputs, reinforcement learning learns from critics, that is, from feedback on how good its actions were.
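Learning from feedback can be illustrated with an epsilon-greedy agent on a 3-armed bandit, a very small reinforcement learning setting. The agent is never told the correct action; it only sees rewards. The reward probabilities, `epsilon`, and the step count are illustrative assumptions.

```python
import random

rng = random.Random(0)
true_probs = [0.1, 0.5, 0.9]  # hidden from the agent
values = [0.0, 0.0, 0.0]      # agent's running estimate of each action's reward
counts = [0, 0, 0]
epsilon = 0.1

for _ in range(2000):
    if rng.random() < epsilon:
        action = rng.randrange(3)                        # explore
    else:
        action = max(range(3), key=lambda a: values[a])  # exploit the best estimate
    # Feedback from the environment: reward 1 with the arm's hidden probability
    reward = 1.0 if rng.random() < true_probs[action] else 0.0
    counts[action] += 1
    # Incremental average: nudge the estimate toward the observed reward
    values[action] += (reward - values[action]) / counts[action]

print("estimates:", [round(v, 2) for v in values])
print("pulls per action:", counts)
```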

Structured learning: It targets complex problems where the output itself has structure, for example speech recognition, machine translation, and face recognition.
